nlcom and the Delta Method in Stata

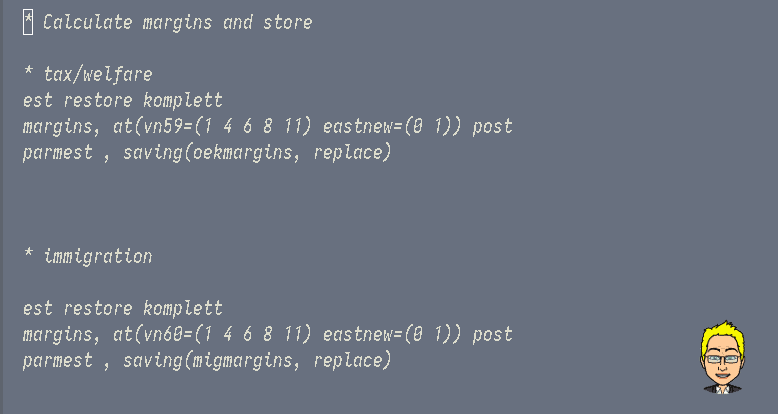

The delta method approximates the moments (in practice, mostly the variance) of some function of a random variable by relying on a truncated Taylor series expansion, usually cut off after the first-order term. In plain words, that means that one can use the delta method to calculate standard errors and confidence intervals, and to perform hypothesis tests, for just about any differentiable linear or nonlinear transformation of a vector of parameter estimates. Stata’s nlcom command is a particularly versatile and powerful implementation of the delta method. If you can write down the formula of the transformation, nlcom will spit out the point estimate along with its standard error and confidence interval. And that means that you can abuse Stata’s built-in procedures to implement your own estimators.
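To make this concrete, here is a minimal sketch of the workflow. The dataset (`auto`, which ships with Stata), the regression, and the ratio of coefficients are illustrative assumptions of mine, not an example from any particular application; the label `ratio` is just a name chosen for the output.

```stata
* Load an example dataset that ships with Stata (illustrative choice)
sysuse auto, clear

* Fit any model; the coefficients are then available as _b[varname]
regress price mpg weight

* A nonlinear transformation of the estimates: the ratio of two
* coefficients. nlcom reports the point estimate together with a
* delta-method standard error, test statistic, and confidence interval.
nlcom (ratio: _b[mpg] / _b[weight])
```

The same pattern should carry over to other estimation commands: fit the model first, then hand nlcom an expression written in terms of the stored coefficients.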