Bad and utterly unsurprising: it sucks to have a non-Anglo name

Tag: bibliometrics

Video: using co-citation analysis with R to assess the chances of scientific communication

Terminology matters for science. If people use different words for the same thing, or even worse, the same word for different things, scientific communication turns into a dialogue of the deaf. European Radical Right Studies are a field where this is potentially a big problem: we use labels like “New”, “Populist”, “Radical”, “Extreme” or even…

Does use of Extreme Right / Radical Right terminology predict co-citations? (Part 2)

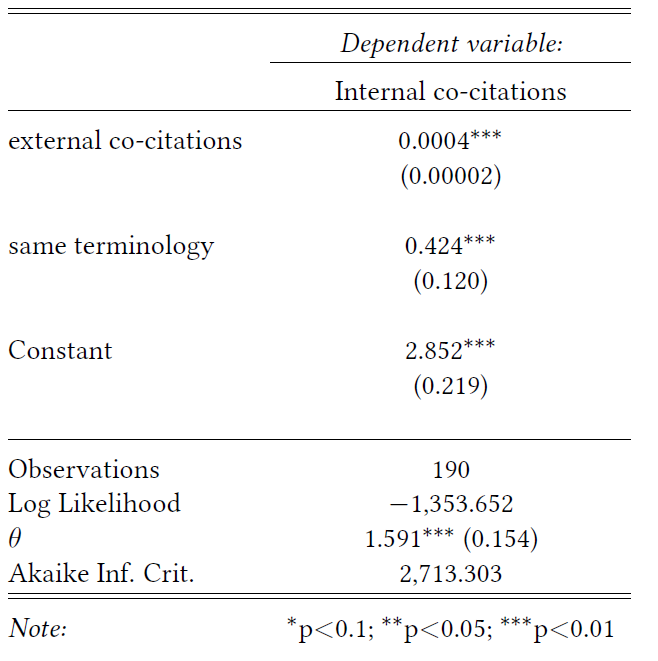

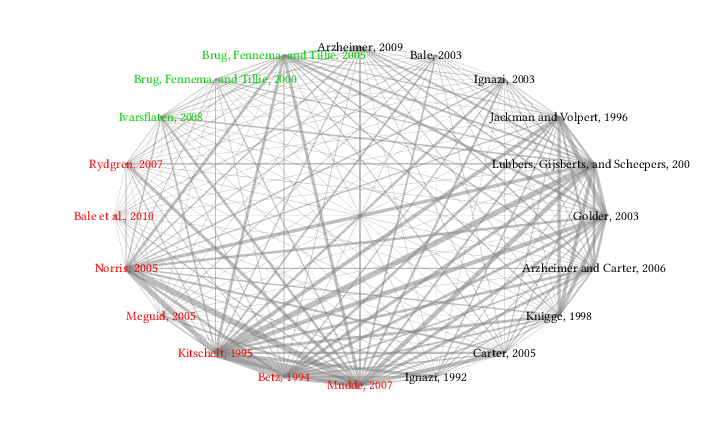

Reprise: The co-citation network in European Radical Right studies. In the last post, I tried to reconstruct the co-citation network in European Radical Right studies and ended up with this neat graph. (Figure: Co-citations within top 20 titles in Extreme / Radical Right studies.) The titles are arranged in groups, with the…

The Extreme / Radical Right research network of co-citations: evidence for 2 different schools? (Part 1)

Research question: For a long time, people working in the field of European Radical Right Studies could not even agree on a common name for the thing that they were researching. Should it be the Extreme Right, the Radical Right, or what? Utterly unimpressed by this fact, I argue in an in-press contribution that this…

Data on Knowledge Networks in Political Science Published

Replication data for our recent article on knowledge networks in Political Science are available from my dataverse

Article on Networks in Political Science Published

Harald’s and my article on citation and collaboration networks in German and British Political Science has finally appeared in print and online, which is obviously great. Here is the abstract: Citations and co-publications are one important indicator of scientific communication and collaboration. By studying patterns of citation and co-publication in four major European Political Science…

Presentation: Knowledge Networks in European Political Science

(Figure: Worldwide mutual citations in Political Science.) Last Saturday, we presented our ongoing work on collaboration and citation networks in Political Science at the 4th UK Network conference held at the University of Greenwich. For this conference, we created a presentation on Knowledge Networks in European Political Science that summarises most of…

Software for Social Network Analysis: Pajek and Friends

After trying a lot of other programs, we have chosen Pajek for doing the analyses and producing those intriguing graphs of cliques and inner circles in Political Science. Pajek is free for non-commercial use and runs on Windows or (via Wine) Linux. It is very fast, can (unlike many other programs) easily handle very large networks, produces decent graphs and does many standard analyses.