It’s that time of the electoral cycle again: With just under seven months to go until the federal election in September, I feel the urge to pool the German pre-election polls. I burnt my fingers four years ago, when I was pretty (though not 100%) sure that the FDP would clear the five per cent threshold (they failed for the first time in more than six decades), but hey – what better motivation to try it again?

Why bother with poll-pooling?

Lately (see Trump, Brexit), pre-election polls have been getting a bad rap. But there is good evidence that by and large, polls have not become worse in recent years. Polls, especially when taken long before the election, should not be understood as predictions, because people will change their mind on how to vote, or will not yet have made up their mind – in many cases, wild fluctuations in the polls will eventually lead to an equilibrium that could have been predicted many months in advance. Rather, polls reflect the current state of public opinion, which will be affected by campaign and real-world effects.

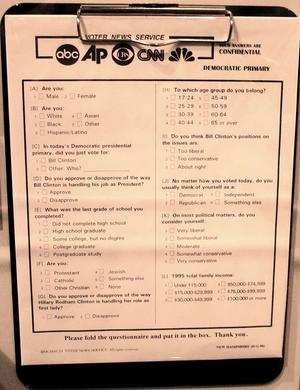

Per se, there is nothing wrong with wanting to track this development. The problem of horse race journalism/politics is largely a problem of over-interpreting the result of a single poll. A survey of 1000 likely voters that measures support for a single party at 40% would have a sampling error of +/- 3 percentage points if it were based on a simple random probability sample. In reality, polling firms rely on complicated multi-stage sampling frames, which will result in even larger sampling errors. Then there is systematic non-response: some groups are more difficult to contact than others. Polling firms therefore apply weights, which may hopefully reduce the resulting bias but will further increase standard errors. And then there are house effects: Some quirk in their sampling frame or weighting scheme may cause polling firm A to consistently overreport support for party Z. So in general, if support for a party or candidate rises or drops by some three or four percentage points, this may create a flurry of comments and excitement. But more often than not, true support in the public may be absolutely stable or even move in the opposite direction.
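For the record, the +/- 3 points figure is just the textbook 95 per cent margin of error for a proportion under simple random sampling. A minimal sketch (the function name is mine, and real polls with weighting and multi-stage designs will have wider margins than this):

```python
import math

def margin_of_error(share, n, z=1.96):
    """Half-width of the approximate 95% interval for a proportion,
    assuming a simple random sample of size n."""
    return z * math.sqrt(share * (1.0 - share) / n)

moe = margin_of_error(0.40, 1000)
print(f"+/- {100 * moe:.1f} percentage points")  # +/- 3.0 percentage points
```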

Creating a poll of polls can alleviate these problems somewhat. By pooling information from many adjacent polls, more accurate estimates are possible. Moreover, house effects may cancel each other out. However, a poll of polls will not help with systematic bias that stems from social desirability. If voters are generally reluctant to voice support for a party that is perceived as extremist or otherwise unpopular, that will affect all polls in much the same way.
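To see why pooling helps with sampling error, here is a deliberately naive sketch: it treats a handful of adjacent polls as independent simple random samples of the same population and averages them by sample size. The poll numbers are made up for illustration, and the sketch ignores house effects and any movement over time, which is precisely what a proper model has to deal with.

```python
import math

def pool(polls, z=1.96):
    """polls: list of (share, sample_size) pairs.
    Returns the sample-size-weighted share and its approximate 95% margin,
    assuming independent simple random samples of one population."""
    total_n = sum(n for _, n in polls)
    pooled = sum(share * n for share, n in polls) / total_n
    margin = z * math.sqrt(pooled * (1.0 - pooled) / total_n)
    return pooled, margin

# Made-up readings for one party from four polls in the same week.
polls = [(0.38, 1000), (0.41, 1500), (0.40, 2000), (0.42, 1000)]
pooled, margin = pool(polls)
print(f"{100 * pooled:.1f}% +/- {100 * margin:.1f} points")
```

With 5500 respondents in the pool, the margin shrinks to well under half of what a single poll of 1000 would give.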

Moreover, poll-pooling raises a number of questions to which there are no obvious answers: How long should polls be retained in the pool over which one wants to average? How can we deal with the fact that there are sometimes long spells with no new polls, whereas at other times, several polls are published within a day or two? How do we factor in that a change in polling that is reflected across multiple companies is more likely to reflect a true shift in allegiances?

The method: Bayesian poll-pooling

Bayesian poll-pooling provides a principled solution to these (and other) issues. It was pioneered by Simon Jackman in his 2006 article on “Pooling the polls over an election campaign”. In Jackman’s model, true support for any given party is latent and follows a random walk: support for today is identical to what it was yesterday, plus (or minus) some tiny random shock. The true level of support is only ever observed on election day, but polls provide a glimpse into the current state of affairs. Unfortunately, that glimpse is biased by house effects, but if one is willing to assume that house effects average out across pollsters, these can be estimated and subsequently factored into the estimates for the true state of support for any given day.
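The data-generating story behind this model can be sketched as a small simulation. This is not the estimation itself, just the assumed process run forwards: a latent random walk, plus polls that see the truth through a house effect and sampling noise. The three pollsters, their house effects, and the size of the daily shock are invented for illustration.

```python
import random

# Hypothetical house effects for three made-up pollsters; they sum to zero.
HOUSE_EFFECTS = {"A": 0.020, "B": -0.005, "C": -0.015}

def simulate(days=200, start=0.40, shock_sd=0.002, n=1000, seed=1):
    rng = random.Random(seed)
    # Latent support: yesterday's value plus a tiny random shock.
    support = [start]
    for _ in range(days - 1):
        support.append(support[-1] + rng.gauss(0.0, shock_sd))
    # One poll per day from a randomly chosen house.
    houses = sorted(HOUSE_EFFECTS)
    polls = []
    for day, truth in enumerate(support):
        house = rng.choice(houses)
        expected = truth + HOUSE_EFFECTS[house]
        sampling_sd = (expected * (1.0 - expected) / n) ** 0.5
        polls.append((day, house, rng.gauss(expected, sampling_sd)))
    return support, polls

def mean_residuals(support, polls):
    """Average (poll reading - true support) per house.
    Only possible in a simulation, where the truth is known."""
    sums, counts = {}, {}
    for day, house, reading in polls:
        sums[house] = sums.get(house, 0.0) + reading - support[day]
        counts[house] = counts.get(house, 0) + 1
    return {h: sums[h] / counts[h] for h in sums}

support, polls = simulate()
print(mean_residuals(support, polls))
```

In the simulation, the per-house residuals land close to the house effects that were put in. With real data the truth is unobserved, which is why the sum-to-zero assumption is needed to pin the house effects down.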

The Bayesian paradigm is particularly attractive here, because it is flexible and because it easily incorporates the idea that we use polls to continuously update our prior beliefs about the state of the political play. It’s also easy to derive other quantities of interest from the distribution of the main estimates, such as the probability that there is currently enough support for the FDP to enter parliament, and, conditional on this event, that a centre-right coalition would beat a leftist alliance.
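Deriving such quantities is mostly a matter of counting posterior draws. A minimal sketch, assuming each draw is a dictionary of party shares for a given day; the four draws below are placeholders rather than model output, and the make-up of the two blocs (CDU/CSU plus FDP versus SPD, Greens and Left) is my assumption for the example.

```python
def coalition_odds(draws, threshold=0.05):
    """Return P(FDP >= threshold) and, conditional on that event,
    P(centre-right bloc > left bloc), both as shares of draws."""
    fdp_in = [d for d in draws if d["FDP"] >= threshold]
    p_fdp = len(fdp_in) / len(draws)
    if not fdp_in:
        return p_fdp, None  # conditional probability undefined
    wins = sum(
        1 for d in fdp_in
        if d["CDU/CSU"] + d["FDP"] > d["SPD"] + d["Greens"] + d["Left"]
    )
    return p_fdp, wins / len(fdp_in)

# Placeholder draws, invented for illustration only.
draws = [
    {"CDU/CSU": 0.34, "FDP": 0.060, "SPD": 0.30, "Greens": 0.08, "Left": 0.08},
    {"CDU/CSU": 0.40, "FDP": 0.070, "SPD": 0.25, "Greens": 0.08, "Left": 0.08},
    {"CDU/CSU": 0.36, "FDP": 0.040, "SPD": 0.28, "Greens": 0.08, "Left": 0.08},
    {"CDU/CSU": 0.41, "FDP": 0.055, "SPD": 0.26, "Greens": 0.07, "Left": 0.08},
]
p_fdp, p_right = coalition_odds(draws)
print(p_fdp, p_right)
```

With a few thousand real draws per day, the same two ratios give the probabilities directly, with no extra modelling required.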

In my previous misguided attempt to pool the German polls, I departed from Jackman’s model in two ways. First, I added a “drift” parameter to the random walk to account for any long-term trends in party support. That was not such a stupid idea as such (I think), but it made the model too inflexible to pick up that voters were ditching the FDP in the last two weeks before the election (presumably CDU supporters who had nursed the idea of a strategic vote for the FDP). Secondly, whereas Jackman’s model has a normal distribution for each party, I fiddled with a multinomial distribution, because Germany has a multi-party system and because vote shares must sum to unity.

The idea of moving to a Dirichlet distribution crossed my mind, but I lacked the mathematical firepower/Bugs prowess to actually specify such a model. Thankfully, I came across this blog, whose author has just done what I had (vaguely) in mind. By the way, it also provides a much better overview of the idea of Bayesian poll aggregation. My own model is basically his latent primary voting intention model (minus the discontinuity).

The one thing I’m not 100% sure about is the “tightness” factor. Like Jackman (and everyone else), the author assumes that most movement in the polls is noise, and that true day-to-day changes are almost infinitesimally small. This is reflected in the tightness factor, which he arbitrarily sets to 50000 after looking at this data. Smaller numbers make for more wiggly lines and wider confidence intervals, because more of the variability in the data is attributed to true change. Unfortunately, this number does not translate to a readily interpretable quantity of interest (say a true day-to-day change of 0.5 per cent).
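One way to get at least a rough feel for the number: if today's shares are drawn from a Dirichlet distribution whose parameters are yesterday's shares multiplied by the tightness factor (my reading of how such a model is typically set up, so treat it as an assumption), then the standard deviation of the daily step for a party with share p is sqrt(p(1 - p) / (tightness + 1)). The sketch below evaluates that expression. Note that the result depends on the party's share, which is part of why there is no single interpretable number.

```python
import math

def daily_sd(share, tightness):
    """SD of one component of Dirichlet(tightness * shares),
    i.e. the implied day-to-day SD for a party at the given share.
    Assumes the Dirichlet random-walk setup described above."""
    return math.sqrt(share * (1.0 - share) / (tightness + 1.0))

for tightness in (50000, 5000):
    for share in (0.35, 0.05):
        sd = daily_sd(share, tightness)
        print(f"tightness={tightness}, share={share:.2f}: "
              f"{100 * sd:.2f} points per day")
```

Under this assumption, a tightness of 50000 implies daily movements of roughly a fifth of a percentage point for a large party and about half that for a party near the threshold.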

After playing with smaller and even larger values, I came up with a cunning plan and made “tightness” a parameter in the model. For the first six weeks of polling data, the estimate for tightness is about an order of magnitude lower, in a range between 3500 and 10000. Whether it is a good idea to ask my poor little stock of data for yet another parameter estimate is anyone’s guess, and I will have to watch how this estimate changes, and whether I’m better off fixing it again.

The data

Data come from seven major polling companies: Allensbach, Emnid, Forsa, FGW, GMS, Infratest Dimap, and INSA. The surveys are commissioned by various major newspapers, magazines, and TV channels. As far as I know, Allensbach is the only company that does face-to-face interviews, and INSA is the only company that relies on an internet access panel. Everyone else is doing telephone interviews. The headline margins are compiled and republished by the incredibly useful wahlrecht.de website: http://www.wahlrecht.de/umfragen/index.htm, which I scrape with a little help from the rvest package.

Replication (Updated)

The most recent version of the code for the model is here. And these are the data (zipped). You will also need this script to extract the data from the HTML pages, while this one should hopefully create the graphs.
